Microsoft provides container orchestration as a service through Azure Kubernetes Service (AKS). Before we dive into our overview of Kubernetes in Azure, it would be helpful to run through a few concepts of how Kubernetes orchestration works.

The following information is based on the information and tutorials available on

Kubernetes (k8s) & Azure Kubernetes Service (AKS)

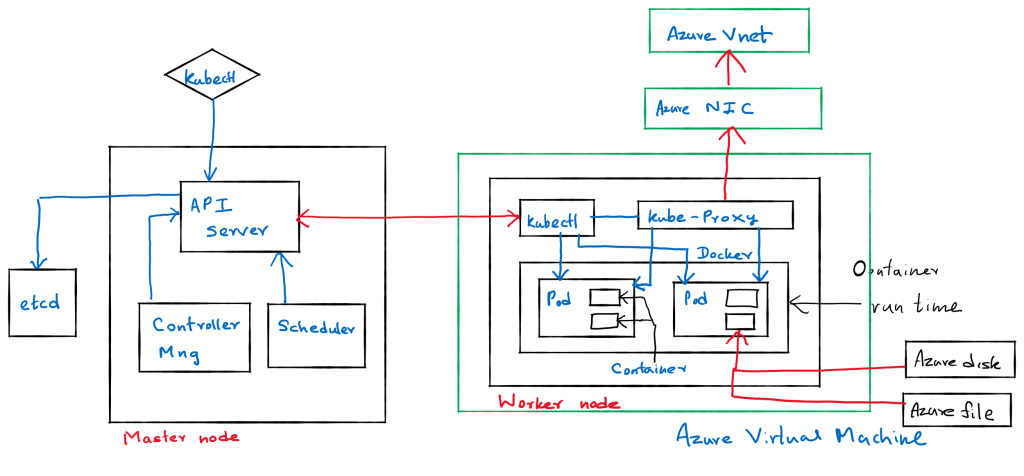

Kubernetes (K8s) Architecture.

Kubernetes orchestration consists of two central components: the Master Nodes and the Worker Nodes.

The Master Node

The Master Node manages the Kubernetes cluster.

You can have more than one Master Node in a cluster for high availability. In that case, the Scheduler and Controller Manager each elect a single leader that performs the work, while their instances on the other Master Nodes act as followers for redundancy (the API Servers, by contrast, can all serve requests at the same time).

The Master Node has the following components:

API Server

- All the administrative tasks are performed via the API Server within the Master Node.

- A user/operator sends REST commands to the API Server, which then validates and processes the requests.

- After executing the requests, the resulting state of the cluster is stored in the distributed key-value store.

- It is the component that management tools, such as kubectl or the Kubernetes dashboard, interact with.
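To make the request flow concrete, here is a toy sketch in Python. It is not how kube-apiserver is implemented: `ToyApiServer` and its methods are hypothetical, a plain dict stands in for etcd, and validation only checks a few required fields. The key layout loosely mirrors the `/registry/...` prefix Kubernetes uses for objects stored in etcd.

```python
import json


class ToyApiServer:
    """Hypothetical stand-in for the API Server: validates requests and
    persists the resulting objects in a dict that plays the role of etcd."""

    def __init__(self) -> None:
        # Keys mimic a "/registry/<resource>/<namespace>/<name>" layout
        self.store: dict[str, str] = {}

    @staticmethod
    def _key(kind: str, name: str, namespace: str) -> str:
        return f"/registry/{kind.lower()}s/{namespace}/{name}"

    def validate(self, obj: dict) -> None:
        # Reject objects missing fields every Kubernetes object carries
        for field in ("apiVersion", "kind"):
            if field not in obj:
                raise ValueError(f"missing field: {field}")
        if not obj.get("metadata", {}).get("name"):
            raise ValueError("missing field: metadata.name")

    def create(self, obj: dict) -> str:
        """Handle a create request: validate, then store the new state."""
        self.validate(obj)
        meta = obj["metadata"]
        key = self._key(obj["kind"], meta["name"], meta.get("namespace", "default"))
        if key in self.store:
            raise ValueError(f"already exists: {key}")
        self.store[key] = json.dumps(obj)
        return key

    def get(self, kind: str, name: str, namespace: str = "default") -> dict:
        return json.loads(self.store[self._key(kind, name, namespace)])


api = ToyApiServer()
key = api.create({"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "web"}})
print(key)  # /registry/pods/default/web
```

The real API Server also handles authentication, authorization, admission control, and watches; the sketch keeps only the "validate, then persist" step described above.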

Scheduler

- The Scheduler schedules the work to different Worker Nodes.

- The Scheduler has the resource usage information for each Worker Node. It also knows about the constraints that users/operators may have set, such as scheduling work on a node that has the label disk==ssd set.

- Before scheduling the work, the Scheduler also takes into account quality of service (QoS) requirements, data locality, affinity, anti-affinity, etc. The Scheduler schedules work in units of Pods.
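The scheduling decision can be sketched as a two-step pass: first filter out nodes that cannot fit the Pod or lack a required label (such as `disk=ssd`), then score the remaining nodes and pick the best one. This is a simplified illustration loosely modeled on the kube-scheduler's filtering and scoring phases, not its actual code; the `Node` and `PodSpec` classes and the "most CPU left" scoring rule are assumptions chosen for brevity.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mib: int  # free memory in MiB
    labels: dict = field(default_factory=dict)


@dataclass
class PodSpec:
    name: str
    cpu_m: int
    mem_mib: int
    node_selector: dict = field(default_factory=dict)  # e.g. {"disk": "ssd"}


def schedule(pod: PodSpec, nodes: list[Node]) -> Optional[str]:
    # Filter: keep nodes with enough free resources and every required label
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m
        and n.free_mem_mib >= pod.mem_mib
        and all(n.labels.get(k) == v for k, v in pod.node_selector.items())
    ]
    if not feasible:
        return None  # nothing fits: the Pod would stay Pending
    # Score: prefer the node with the most CPU left after placement,
    # breaking ties by name so the result is deterministic
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod.cpu_m, n.name))
    return best.name


nodes = [
    Node("node-a", 2000, 4096, {"disk": "hdd"}),
    Node("node-b", 1000, 2048, {"disk": "ssd"}),
    Node("node-c", 3000, 8192, {"disk": "ssd"}),
]
print(schedule(PodSpec("db", 500, 1024, {"disk": "ssd"}), nodes))  # node-c
```

Spreading work onto the least-loaded node is just one possible policy; the real scheduler combines many weighted scoring plugins.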

Controller Manager

- The Controller Manager manages different non-terminating control loops, which regulate the state of the Kubernetes cluster.

- Each one of these control loops knows about the desired state of the objects it manages and watches their current state through the API Server.

- In a control loop, if the current state of the objects it manages does not meet the desired state, then the control loop takes corrective steps to make sure that the current state is the same as the desired state.
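A control loop boils down to "observe, compare with the desired state, correct". The sketch below shows one such loop for a replica count. `ToyReplicaController` is hypothetical and deliberately minimal; real controllers (for example, the ReplicaSet controller) watch objects through the API Server instead of being handed the current state.

```python
import itertools


class ToyReplicaController:
    """Hypothetical controller that keeps `desired` Pod replicas running."""

    def __init__(self, desired: int) -> None:
        self.desired = desired
        self._ids = itertools.count(1)  # source of new Pod names

    def reconcile(self, current: set[str]) -> set[str]:
        """One pass of the control loop: compare the current state with the
        desired state and return the state after corrective action."""
        state = set(current)
        while len(state) < self.desired:  # too few replicas: create Pods
            state.add(f"pod-{next(self._ids)}")
        while len(state) > self.desired:  # too many replicas: delete Pods
            state.remove(max(state))      # here: the lexicographically largest name
        return state


ctrl = ToyReplicaController(desired=3)
state = ctrl.reconcile(set())   # {'pod-1', 'pod-2', 'pod-3'}
state.discard("pod-2")          # simulate a Pod failure
state = ctrl.reconcile(state)   # {'pod-1', 'pod-3', 'pod-4'}
```

Because the loop is non-terminating, a real controller repeats this comparison indefinitely, reacting each time the observed state drifts from the desired one.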

etcd

- As mentioned above, etcd is a distributed key-value store that is used to store the cluster state. It can run as part of the Kubernetes Master, or it can be configured externally, in which case the Master Nodes connect to it.

Worker Node

A Worker Node is a machine (VM, physical server, etc.) that runs applications in Pods and is controlled by the Master Node.

Pods are scheduled on the Worker Nodes, which have the necessary tools to run and connect them.

Pods

- A Pod is the scheduling unit in Kubernetes.

- It is a logical collection of one or more containers that are always scheduled together.

- To access the applications from the external world, we connect to the Worker Nodes, not to the Master Node(s).
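For illustration, here is a Pod manifest with two containers, built as a Python dict and printed as JSON (which `kubectl apply -f` accepts just like YAML). The Pod name, the `log-tailer` sidecar, and the `nginx:1.25` and `busybox:1.36` images are placeholders chosen for this example. What matters is that both containers are listed in one Pod spec, share the Pod's network and its `emptyDir` volume, and are therefore placed on the same Worker Node.

```python
import json


def two_container_pod(name: str) -> dict:
    """Build a Pod manifest whose two containers are always scheduled together.
    Container names and images are illustrative placeholders."""
    shared_logs = {"name": "logs", "mountPath": "/var/log/nginx"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the Pod and is shared by its containers
            "volumes": [{"name": "logs", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    "volumeMounts": [shared_logs],
                },
                {
                    "name": "log-tailer",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "tail -F /var/log/nginx/access.log"],
                    "volumeMounts": [shared_logs],
                },
            ],
        },
    }


manifest = two_container_pod("web")
print(json.dumps(manifest, indent=2))
```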

Worker Node Components:

Container Runtime

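- To run containers, a container runtime is needed on the Worker Node. Kubernetes originally ran containers with Docker by default and could also use the rkt runtime; current versions use runtimes that implement the Container Runtime Interface (CRI), such as containerd or CRI-O, since built-in Docker Engine support (dockershim) was removed in Kubernetes 1.24 and the rkt project has been archived.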

Kubelet

- The kubelet is an agent which runs on each Worker Node and communicates with the Master Node.

- It receives the Pod definition via various means (primarily, through the API Server), and runs the containers associated with the Pod.

- It also makes sure the containers which are part of the Pods are healthy at all times.

- The kubelet connects to the container runtime to run containers.

kube-proxy

- Instead of connecting directly to Pods to access the applications, we use a logical construct called a Service as a connection endpoint. A Service groups related Pods and load balances traffic across them when accessed.

- kube-proxy is the network proxy that runs on each Worker Node and watches the API Server for the creation and deletion of Service endpoints. For each Service endpoint, kube-proxy sets up the routes (for example, iptables or IPVS rules) so that traffic sent to the Service can reach it.
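The Service abstraction can be sketched in a few lines: a label selector picks the matching Pods, and requests to the Service are spread across them. This is a user-space toy (`Pod`, `ToyService`, and the IP addresses are hypothetical). In its usual modes the real kube-proxy does not forward traffic itself but programs iptables or IPVS rules on each node, and how a backend is picked depends on that mode; round-robin is used here only because it is easy to follow.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


def select_endpoints(selector: dict, pods: list[Pod]) -> list[str]:
    # A Pod belongs to the Service if it carries every label in the selector
    return [p.ip for p in pods
            if all(p.labels.get(k) == v for k, v in selector.items())]


class ToyService:
    """Hypothetical Service: a stable entry point that spreads requests
    across the matching Pods in round-robin order."""

    def __init__(self, name: str, selector: dict, pods: list[Pod]) -> None:
        self.name = name
        self.endpoints = select_endpoints(selector, pods)
        if not self.endpoints:
            raise RuntimeError(f"Service {name!r} has no endpoints")
        self._next = itertools.cycle(self.endpoints)

    def route(self) -> str:
        """Return the Pod IP that should receive the next request."""
        return next(self._next)


pods = [
    Pod("web-1", "10.244.0.5", {"app": "web"}),
    Pod("web-2", "10.244.1.7", {"app": "web"}),
    Pod("db-1", "10.244.2.3", {"app": "db"}),
]
svc = ToyService("web", {"app": "web"}, pods)
print([svc.route() for _ in range(3)])  # ['10.244.0.5', '10.244.1.7', '10.244.0.5']
```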

Azure Kubernetes Service (AKS)

AKS provides a single-tenant cluster master that is managed by the Azure platform, and you are only charged for the AKS nodes that run your applications. AKS is built on top of the open-source Azure Kubernetes Service Engine (aks-engine).

The Azure cluster master includes the following core Kubernetes components, the same ones described in the Kubernetes architecture above:

- kube-apiserver

- etcd – Because the cluster master is managed by the platform, you do not need to configure a highly available etcd store yourself.

- kube-scheduler

- kube-controller-manager

Because the cluster master is managed by Azure, you cannot access it directly.

Upgrades to Kubernetes are orchestrated through the Azure CLI or Azure portal, which upgrades the cluster master and then the nodes.
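Here is a minimal Python sketch of that upgrade workflow through the Azure CLI. It assumes `az` is installed, on your PATH, and already logged in. It first lists the available versions with `az aks get-upgrades`, then upgrades with `az aks upgrade --kubernetes-version`. The resource group, cluster name, and version string are placeholders, and the `run` helper is hypothetical: it only returns the command line unless you pass `dry_run=False`.

```python
import shlex
import subprocess


def get_upgrades_cmd(resource_group: str, cluster: str) -> list[str]:
    # Lists the Kubernetes versions the cluster can be upgraded to
    return ["az", "aks", "get-upgrades", "--resource-group", resource_group,
            "--name", cluster, "--output", "table"]


def upgrade_cmd(resource_group: str, cluster: str, version: str) -> list[str]:
    # Upgrades the cluster master first, then the nodes
    return ["az", "aks", "upgrade", "--resource-group", resource_group,
            "--name", cluster, "--kubernetes-version", version]


def run(cmd: list[str], dry_run: bool = True) -> str:
    """Return the command line; when dry_run is False, also execute it.
    Output and any confirmation prompt go straight to the terminal."""
    if not dry_run:
        subprocess.run(cmd, check=True)
    return shlex.join(cmd)


# Placeholder names and version; substitute your own
print(run(get_upgrades_cmd("myResourceGroup", "myAKSCluster")))
print(run(upgrade_cmd("myResourceGroup", "myAKSCluster", "1.29.2")))
```

Building argument lists instead of shell strings avoids quoting problems, and the dry-run default lets you review a command before it touches a live cluster.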

To troubleshoot possible issues, you can review the cluster master logs through Azure Log Analytics.

Once you define the nodes and decide on their number and size, the Azure platform configures secure communication between the cluster master and the nodes.

If you need to configure the cluster master in a particular way or need direct access to it, you can deploy your own Kubernetes cluster using aks-engine.

Nodes in AKS

Each node in AKS is an Azure virtual machine (VM) that runs the Kubernetes node components and a container runtime.

The kubelet processes the orchestration requests from the cluster master and runs the requested containers on the node.

Virtual networking is handled by the kube-proxy on each node. The proxy routes network traffic and manages IP addressing for services and pods.

The AKS VM image for the nodes is based on Ubuntu Linux (at the time of writing).

When you create an AKS cluster or scale up the number of nodes, the Azure platform creates the requested number of VMs and configures them. There is no manual configuration for you to perform.

If you need to use a different host OS, container runtime, or include custom packages, you can deploy your own Kubernetes cluster using aks-engine.

Nodes of the same configuration are grouped together into node pools. Each node pool consists of the AKS VMs that act as nodes, and upgrades and scaling operations are applied to a node pool as a whole.
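Assuming the Azure CLI is installed and logged in, these pool-level operations map onto the `az aks nodepool scale` and `az aks nodepool upgrade` commands. The Python helpers below are hypothetical and only build the argument lists (print them, or pass them to `subprocess.run` to execute). The resource group, cluster, pool name, node count, and version are placeholders.

```python
import shlex


def scale_pool_cmd(resource_group: str, cluster: str, pool: str, node_count: int) -> list[str]:
    # Every VM in the pool shares one configuration, so scaling only changes the count
    if node_count < 0:
        raise ValueError("node_count must be non-negative")
    return ["az", "aks", "nodepool", "scale", "--resource-group", resource_group,
            "--cluster-name", cluster, "--name", pool, "--node-count", str(node_count)]


def upgrade_pool_cmd(resource_group: str, cluster: str, pool: str, version: str) -> list[str]:
    # The upgrade is applied to all nodes in the pool
    return ["az", "aks", "nodepool", "upgrade", "--resource-group", resource_group,
            "--cluster-name", cluster, "--name", pool, "--kubernetes-version", version]


# Placeholder names; "nodepool1" stands in for your pool's name
print(shlex.join(scale_pool_cmd("myResourceGroup", "myAKSCluster", "nodepool1", 3)))
print(shlex.join(upgrade_pool_cmd("myResourceGroup", "myAKSCluster", "nodepool1", "1.29.2")))
```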

AKS networking


AKS provides several options for configuring network access to your nodes:

- Internal access – connected to a virtual network (advanced networking) with a site-to-site (S2S) VPN and forced tunneling to an on-premises network.

- External access – a public IP address assigned, with several separate configuration options.

- Load balancing – using the Azure load balancer.

- Ingress – configured for ingress traffic, for SSL/TLS termination or routing to multiple components.

Services

- In Kubernetes, Services logically group pods to allow for direct access via an IP address or DNS name and on a specific port.

- As you open network ports to pods, the corresponding Azure network security group (NSG) rules are configured.

- For HTTP application routing, Azure can also configure external DNS as new ingress routes are configured.

- The following Service types are available for configuring IP addresses:

ClusterIP – Creates an internal IP address for use within the AKS cluster. Good for internal-only applications that support other workloads within the cluster.

NodePort – Creates a port mapping on the underlying node that allows the application to be accessed directly with the node IP address and port.

LoadBalancer – Creates an Azure load balancer resource, configures an external IP address, and connects the requested pods to the load balancer backend pool. To allow customer traffic to reach the application, load balancing rules are created on the desired ports.

ExternalName – Creates a specific DNS entry (a CNAME record pointing to an external DNS name) for easier application access.
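To see how the four types differ in practice, the sketch below builds a Service manifest for each type as a Python dict. Only the fields shown are set; the helper name, the port numbers 80 and 8080, and `billing.example.com` are illustrative assumptions. On AKS, applying the `LoadBalancer` variant is what triggers the Azure load balancer and external IP described above.

```python
import json
from typing import Optional


def service_manifest(name: str, svc_type: str, selector: Optional[dict] = None,
                     port: int = 80, target_port: int = 8080,
                     external_name: Optional[str] = None) -> dict:
    """Build a v1 Service manifest for one of the four Service types."""
    spec: dict = {"type": svc_type}
    if svc_type in ("ClusterIP", "NodePort", "LoadBalancer"):
        # These types route traffic to the Pods matched by the selector
        spec["selector"] = selector or {}
        spec["ports"] = [{"protocol": "TCP", "port": port, "targetPort": target_port}]
        # For NodePort (and, by default, LoadBalancer), Kubernetes assigns
        # a node port automatically when "nodePort" is omitted
    elif svc_type == "ExternalName":
        if not external_name:
            raise ValueError("ExternalName Services need external_name")
        spec["externalName"] = external_name  # served as a DNS CNAME record
    else:
        raise ValueError(f"unknown Service type: {svc_type}")
    return {"apiVersion": "v1", "kind": "Service",
            "metadata": {"name": name}, "spec": spec}


lb = service_manifest("web", "LoadBalancer", selector={"app": "web"})
ext = service_manifest("billing", "ExternalName", external_name="billing.example.com")
print(json.dumps(lb, indent=2))
```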

Next, I hope to run through deploying a containerized application into AKS, and configuring and managing a cluster.
