A comparison of the big two: Azure and Amazon Web Services.

The virtual machine is the central computing unit in the infrastructure-as-a-service (IaaS) layer of a cloud service.

Each cloud provider has its own architecture for how virtual machines are used in the cloud.

In this post I give an overview of the architecture and components of virtual machines in Microsoft Azure and Amazon Web Services, and share resources that delve deeper into each of them.

MICROSOFT AZURE

Several components make up the computing resources of an Azure virtual machine. How much you can customize each component differs depending on the resource.

The key resources associated with a virtual machine are:

1. The number of virtual CPUs

2. The amount of RAM

3. Disk type and storage

4. The virtual network adapters

5. Private and/or public IP addresses

Virtual Machine SKUs/Types

SKUs are the preconfigured sets of options you choose from when creating a virtual machine. The SKU determines the:

- Number of virtual CPUs dedicated to the VM

- Amount of RAM dedicated to the VM

- Purpose (optimization) the VM series is designed for. The optimizations are:

  - General-purpose

  - Memory-optimized

  - Compute-optimized

  - GPU-optimized

  - High-performance compute

  - Storage-optimized

Beyond the optimization purpose, VM types are grouped by underlying hardware, processors, RAM, storage, and networking thresholds.
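To make the idea of picking a SKU concrete, here is a minimal sketch in Python. The `VmSku` class, the `pick_sku` helper, and the small catalog are my own illustration and not an Azure API. The vCPU and RAM figures are meant only as examples and should be checked against the current Azure size tables.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class VmSku:
    """One preconfigured VM size (hypothetical model, not an Azure SDK type)."""
    name: str
    optimization: str
    vcpus: int
    ram_gib: int


# Illustrative catalog only; verify real figures in the Azure documentation.
CATALOG = (
    VmSku("Standard_D2s_v3", "general-purpose", 2, 8),
    VmSku("Standard_D4s_v3", "general-purpose", 4, 16),
    VmSku("Standard_E2s_v3", "memory-optimized", 2, 16),
    VmSku("Standard_F2s_v2", "compute-optimized", 2, 4),
)


def pick_sku(
    optimization: str,
    min_vcpus: int,
    min_ram_gib: int,
    catalog: Sequence[VmSku] = CATALOG,
) -> Optional[VmSku]:
    """Return the smallest SKU in the category that meets both minimums."""
    candidates = [
        sku
        for sku in catalog
        if sku.optimization == optimization
        and sku.vcpus >= min_vcpus
        and sku.ram_gib >= min_ram_gib
    ]
    # "Smallest" here means fewest vCPUs, then least RAM; None if nothing fits.
    return min(candidates, key=lambda s: (s.vcpus, s.ram_gib), default=None)


if __name__ == "__main__":
    print(pick_sku("general-purpose", min_vcpus=3, min_ram_gib=8))
```

The point of the sketch is that you do not set CPU and RAM independently. You pick the closest preconfigured bundle within the optimization category that fits your workload.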

RESOURCE:https://azure.microsoft.com/en-us/pricing/details/virtual-machines/series/

The underlying host processors can differ based on the VM series you choose. Microsoft data centers are primarily powered by Intel chips. However, according to Bloomberg, “Microsoft’s vice president of cloud computing, Jason Zander, said the company had made a ‘significant commitment’ to ARM servers porting its Windows Server operating system onto ARM-powered designs by Qualcomm and Cavium, which were unveiled at Open Compute.”

RESOURCE:https://docs.microsoft.com/en-us/azure/virtual-machines/windows/sizes

Storage

It is best to have a storage strategy in place well before you create a VM. Decide in advance whether you will use managed or unmanaged disks, whether you need premium or standard storage, and how you will manage permissions.

The primary administrative container for storage is the storage account.

With unmanaged disks, you create your own storage account, or specify an existing one, when you create the disk at VM creation. This makes you responsible for managing the storage, its permissions, and keeping track of storage thresholds.

Managed disks handle storage account creation and management in the background for you, and ensure that you do not have to worry about the scalability limits of the storage account.

Next, determine which type of storage to use: SSD (Premium storage) or HDD (Standard storage). The two have significantly different levels of performance. The virtual hard disks (VHDs) used in Azure are .vhd files stored as page blobs in a standard or premium storage account.

Beyond the storage for the system disk, you can also attach other disks and SMB file shares to expand your storage.

The operating system disk is mandatory for a VM. It’s registered as a SATA drive and labeled as the C: drive by default. This disk has a maximum capacity of 2048 gigabytes (GB).

Each VM includes a temporary disk. The temporary disk provides short-term storage for applications and processes, and is intended to store only data such as page or swap files. Data on the temporary disk may be lost during a maintenance event or when you redeploy the VM. During a standard reboot, the data on the temporary disk persists. Temporary storage is based on the underlying host machine’s storage, which changes when a VM is redeployed.

A data disk is a VHD that’s attached to a virtual machine to store data that is persistent. Data disks are registered as SCSI drives and are labeled with a letter of your choosing. Each data disk has a maximum capacity of 4095 GB. The SKU of the virtual machine determines how many data disks you can attach to it and the type of storage you can use to host the disks.
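The limits above lend themselves to a quick sanity check before you deploy. The following is a small sketch of my own, not an Azure tool. The two size limits are the ones quoted in this post, and `max_data_disks` is a value you would look up for your chosen SKU.

```python
from typing import List

# Limits as quoted in this post; confirm current values in the Azure docs.
OS_DISK_MAX_GB = 2048
DATA_DISK_MAX_GB = 4095


def validate_disk_layout(
    os_disk_gb: int, data_disks_gb: List[int], max_data_disks: int
) -> List[str]:
    """Return a list of problems with a planned disk layout (empty if none).

    max_data_disks depends on the VM SKU, so the caller supplies it.
    """
    problems = []
    if os_disk_gb > OS_DISK_MAX_GB:
        problems.append(
            f"OS disk is {os_disk_gb} GB, above the {OS_DISK_MAX_GB} GB limit"
        )
    if len(data_disks_gb) > max_data_disks:
        problems.append(
            f"{len(data_disks_gb)} data disks requested, "
            f"but this SKU allows {max_data_disks}"
        )
    for index, size_gb in enumerate(data_disks_gb):
        if size_gb > DATA_DISK_MAX_GB:
            problems.append(
                f"data disk {index} is {size_gb} GB, "
                f"above the {DATA_DISK_MAX_GB} GB limit"
            )
    return problems


if __name__ == "__main__":
    for problem in validate_disk_layout(128, [1024, 5000], max_data_disks=1):
        print(problem)
```

A check like this is most useful early, because the number of data disks is tied to the SKU and is awkward to change once the VM is in service.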

You can also create a file share in a storage account and attach it to an Azure VM, or to any other machine inside or outside of Azure.

RESOURCE:https://docs.microsoft.com/en-us/azure/storage/common/storage-introduction

Connectivity

Connectivity to an Azure VM goes through the virtual network adapter connected to the VM. This virtual adapter has three primary elements:

- A private IP address, assigned from the subnet address space of the virtual network you connect to. You define that address space.

- A public IP address, which is dynamic by default and can be made static.

- A network security group (NSG), which can be attached to the network adapter to designate which protocols and ports are allowed to and from your VM. (NOTE: NSGs can also be associated with subnets in Azure.)
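To show roughly how an NSG decides whether traffic gets through, here is a simplified sketch. It relies on one fact not covered above: NSG rules carry a priority, and the matching rule with the lowest priority number wins. The `NsgRule` class and `is_inbound_allowed` function are hypothetical names of mine. The sketch ignores source and destination addresses and stands in a plain "deny" for Azure's built-in default rules.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class NsgRule:
    """Simplified inbound rule (hypothetical model, not an Azure SDK type)."""
    priority: int          # lower number = evaluated first
    protocol: str          # "TCP", "UDP", or "*" for any
    port: Optional[int]    # None means any port
    allow: bool            # True = Allow, False = Deny


def is_inbound_allowed(rules: Iterable[NsgRule], protocol: str, port: int) -> bool:
    """Evaluate rules in priority order; the first match decides."""
    for rule in sorted(rules, key=lambda r: r.priority):
        protocol_matches = rule.protocol in ("*", protocol)
        port_matches = rule.port is None or rule.port == port
        if protocol_matches and port_matches:
            return rule.allow
    # Assumption: treat "no matching rule" as deny, standing in for the
    # platform's default rules.
    return False


if __name__ == "__main__":
    rules = [
        NsgRule(priority=200, protocol="*", port=None, allow=False),
        NsgRule(priority=100, protocol="TCP", port=22, allow=True),
    ]
    print(is_inbound_allowed(rules, "TCP", 22))
    print(is_inbound_allowed(rules, "TCP", 3389))
```

Because the first match wins, a broad deny rule only blocks what the more specific, lower-numbered allow rules have not already let through.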

RESOURCE:https://docs.microsoft.com/en-us/azure/virtual-network/virtual-network-network-interface

Maintenance and Availability

In the IaaS model of cloud computing, once a VM is deployed, updating, patching, and general maintenance of that virtual machine are the sole responsibility of the administrator.

The maintenance and upkeep of the underlying hardware at the data center, the host OS, and the Azure management fabric remain the responsibility of Microsoft (the cloud provider).

The majority of these updates are performed without any impact to the hosted virtual machines. However, there are cases where updates do have an impact on hosted VMs.

Planned and unplanned maintenance events are outside of your control and in the hands of Microsoft.

Planned maintenance is handled in two ways:

- Memory-preserving updates, where a VM is paused for up to 30 seconds. Whether this affects you depends on the nature of the application running on your VM (stateless vs. stateful).

- Updates that require a reboot. When memory-preserving updates are not sufficient, you are notified in advance of a window during which your VM will be unresponsive. Planned maintenance of this nature is handled in two phases:

  - The self-service window

  - The scheduled maintenance window

Furthermore, there is the risk of unplanned maintenance caused by hardware or software failures that might affect your virtual machines.

To guard against both scenarios, it’s best to run multiple VMs in availability sets behind load balancers.

It’s also vital that when choosing the VM SKU you look through the SLA (Service Level Agreement) for best possible uptime guarantees.

For SQL Server, you have the option of configuring Always On availability groups on Azure virtual machines.

RESOURCE:https://docs.microsoft.com/en-us/azure/virtual-machines/windows/manage-availability

Linux Virtual Machine

The architecture and components of a Linux VM are the same as those described above. You do have a few Linux-specific features available when creating a Linux VM. In my time as a Premier Field Engineer in Azure, one of the roadblocks that Linux administrators using Azure faced was the lack of console access to their Linux VMs. In May of this year, the public preview of console access was announced, to the joy of many Linux admins.

This again is one of the many extraordinary steps taken by Microsoft to partner up with the Linux community.

RESOURCE:https://azure.microsoft.com/en-us/blog/virtual-machine-serial-console-access/

AMAZON WEB SERVICES

Amazon’s virtual computing service is EC2, which stands for Elastic Compute Cloud. EC2 virtual computing environments are known as instances. Although “virtual machine” and “instance” are commonly used interchangeably, knowing the AWS terminology makes it easier to navigate the AWS console and understand the documentation.

The components associated with the virtual machine are:

- Amazon Machine Images (AMIs): preconfigured templates for your instances that package the bits you need for your server, including the operating system and additional software.

- Instance types: various configurations of CPU, memory, storage, and networking capacity for your instances.

- Storage volumes

- Networking performance

Instance Types

These are similar to the SKUs in Azure. Instance types comprise varying combinations of CPU, memory, storage, and networking capacity and give you the flexibility to choose the appropriate mix of resources for your applications.

Just as in Azure, the instance types are categorized by optimization:

- General-purpose

- Memory-optimized

- Compute-optimized

- Accelerated computing

- Storage-optimized

Within each optimization category, the instance types are grouped further based on the underlying hardware. As an example, the general-purpose M5 instance types have 2.5 GHz Intel Xeon® Platinum 8175 processors with the new Intel Advanced Vector Extensions (AVX-512) instruction set. This type of instance has 12 separate offerings to choose from.

Beyond the optimization and the instance type, individual instances vary in several respects:

- Number of vCPUs

- Amount of memory

- Storage options

- Network performance options

RESOURCE: https://aws.amazon.com/ec2/instance-types/

Storage

Your primary storage service for virtual instances on Amazon is Amazon EBS (Elastic Block Store).

Amazon EBS provides durable, block-level storage volumes that you can attach to a running instance. You can use Amazon EBS as a primary storage device for data that requires frequent and granular updates. An EBS volume behaves like a raw, unformatted, external block device that you can attach to a single instance. The volume persists independently from the running life of an instance. After an EBS volume is attached to an instance, you can use it like any other physical hard drive.

Amazon EBS provides the following volume types:

SSD-backed volumes, optimized for transactional workloads involving frequent read/write operations with small I/O size, where the dominant performance attribute is IOPS:

- General Purpose SSD (gp2) volumes offer cost-effective storage that is ideal for a broad range of workloads.

- Provisioned IOPS SSD (io1) volumes are designed to meet the needs of I/O-intensive workloads, particularly database workloads, that are sensitive to storage performance and consistency.

HDD-backed volumes, optimized for large streaming workloads where throughput (measured in MiB/s) is a better performance measure than IOPS:

- Throughput Optimized HDD (st1) volumes provide low-cost magnetic storage that defines performance in terms of throughput rather than IOPS. st1 is the hot-storage equivalent.

- Cold HDD (sc1) volumes have a lower throughput limit than st1 and are a good fit for large, sequential cold-data workloads. If you access your data infrequently and are looking to save costs, sc1 provides inexpensive block storage (the cold-storage equivalent).
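The choice between the four volume types above boils down to two questions, which the following sketch spells out. The function name and its parameters are my own shorthand and not part of any AWS API. Real sizing decisions also involve cost and volume size, which I leave out here.

```python
def choose_ebs_volume_type(
    dominant_metric: str,
    *,
    io_intensive: bool = False,
    infrequent_access: bool = False,
) -> str:
    """Map a workload profile to one of the EBS volume types described above.

    dominant_metric: "iops" for small, frequent reads and writes, or
                     "throughput" for large streaming workloads.
    io_intensive: the workload is sensitive to storage performance and
                  consistency (for example, a busy database).
    infrequent_access: the data is cold and cost matters more than speed.
    """
    if dominant_metric == "iops":
        # SSD-backed family
        return "io1" if io_intensive else "gp2"
    if dominant_metric == "throughput":
        # HDD-backed family
        return "sc1" if infrequent_access else "st1"
    raise ValueError(f"unknown dominant_metric: {dominant_metric!r}")


if __name__ == "__main__":
    print(choose_ebs_volume_type("iops", io_intensive=True))
    print(choose_ebs_volume_type("throughput", infrequent_access=True))
```

In Azure terms, the first question is roughly the Premium versus Standard decision, while the second question is a finer-grained split that the volume types make explicit.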

Amazon EC2 Instance Store (Azure Temporary Disk)

Many instances can access storage from disks that are physically attached to the host computer. This disk storage is referred to as instance store. Instance store provides temporary block-level storage for instances. The data on an instance store volume persists only during the life of the associated instance; if you stop or terminate an instance, any data on instance store volumes is lost. For more information, see the Amazon EC2 Instance Store documentation.

Amazon EFS File System (Azure File share)

Amazon EFS provides scalable file storage for use with Amazon EC2. You can create an EFS file system and configure your instances to mount the file system.

Amazon S3

You can use Amazon S3 to store backup copies of your data and applications. Amazon EC2 uses Amazon S3 to store EBS snapshots and instance store-backed AMIs.

RESOURCE:https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumeTypes.html?icmpid=docs_ec2_console

Connectivity

An elastic network interface (ENI) is a logical networking component in a VPC that represents a virtual network card for an instance. You have the ability to detach and attach these ENIs as you wish.

The primary features of the ENI are:

- A primary private IPv4 address from the IPv4 address range of your VPC

- One or more secondary private IPv4 addresses from the IPv4 address range of your VPC

- One Elastic IP address (IPv4) per private IPv4 address

- One public IPv4 address

- One or more IPv6 addresses

- One or more security groups

Private IPv4 Addresses

An instance launched in a VPC receives a primary private IP address from the IPv4 address range of the subnet.

Public IP Addresses

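When you launch an instance, AWS can automatically assign it a public IP address from Amazon’s public IPv4 address pool. In a VPC, a subnet-level setting determines whether instances receive a public IP address, and you can override that setting at launch. Only on the older EC2-Classic platform was the automatic assignment something you could not modify.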

Elastic IP Addresses (IPv4)

An Elastic IP address is a public IPv4 address that you allocate to your account. Because it belongs to the account as a whole, you can associate it with different instances at different times. You can associate it with an instance and disassociate it again as you require, and it stays allocated to your account until you choose to release it.

The number of ENIs that you can attach varies based on the instance type.

Security Groups

A security group acts as a virtual firewall that controls the traffic for one or more instances, just as NSGs do in Azure. When you launch an instance, you can associate one or more security groups with it. You add rules to each security group, specifying ports and protocols, that allow traffic to or from its associated instances.
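One difference from Azure NSGs is worth sketching. Security group rules only allow traffic. There are no deny rules and no priorities, and when several groups are attached to an instance their rules are combined. The `AllowRule` class and `is_allowed` function below are hypothetical names for a simplified model that ignores source address ranges.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AllowRule:
    """Simplified inbound allow rule (hypothetical model, not a boto3 type)."""
    protocol: str   # e.g. "tcp" or "udp"
    from_port: int  # start of the allowed port range, inclusive
    to_port: int    # end of the allowed port range, inclusive


def is_allowed(
    security_groups: Iterable[Iterable[AllowRule]], protocol: str, port: int
) -> bool:
    """Traffic passes if ANY rule in ANY attached group allows it."""
    return any(
        rule.protocol == protocol and rule.from_port <= port <= rule.to_port
        for group in security_groups
        for rule in group
    )


if __name__ == "__main__":
    web = [AllowRule("tcp", 80, 80), AllowRule("tcp", 443, 443)]
    admin = [AllowRule("tcp", 22, 22)]
    print(is_allowed([web, admin], "tcp", 22))
    print(is_allowed([web], "tcp", 22))
```

Since everything not explicitly allowed is dropped, you tighten access by removing rules or detaching groups rather than by adding a deny.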

RESOURCE:https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html

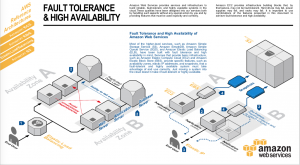

Maintenance and Availability

Amazon provides the scheduled events functionality for greater visibility into the timing of reboots of Amazon services. In addition to added visibility, in most cases, you can use the scheduled events to manage reboots on your own schedule, so as to be able to control the impact of any downtime.

For unplanned maintenance and fault tolerance, your best option is the use of availability zones. When you launch an EC2 instance, you can select an availability zone or let AWS choose one for you. If you distribute your instances across multiple availability zones and one instance fails, you can design your application so that an instance in another availability zone can handle requests.

You can use Elastic IP addresses to mask the failure of an instance in one availability zone by rapidly remapping the address to an instance in another availability zone.
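The remapping idea can be sketched without touching AWS at all. In the model below, the instance IDs, zone names, and the `remap_elastic_ip` function are all made up for illustration. A real implementation would call the EC2 API to move the address and would need a health check to feed it.

```python
from typing import Dict, Optional, Set


def remap_elastic_ip(
    current_target: str, zone_of: Dict[str, str], healthy: Set[str]
) -> Optional[str]:
    """Decide which instance the Elastic IP should point at.

    current_target: instance ID the address is associated with now.
    zone_of: maps every candidate instance ID to its availability zone.
    healthy: instance IDs currently passing health checks.
    Returns the instance to use, or None if no healthy instance exists
    in another zone.
    """
    if current_target in healthy:
        return current_target  # nothing to do

    failed_zone = zone_of[current_target]
    for instance_id, zone in zone_of.items():
        # Pick a healthy instance in a different zone than the failed one.
        if instance_id in healthy and zone != failed_zone:
            return instance_id
    return None


if __name__ == "__main__":
    zones = {"i-primary": "us-east-1a", "i-standby": "us-east-1b"}
    print(remap_elastic_ip("i-primary", zones, healthy={"i-standby"}))
```

Clients keep using the same public address throughout, which is what makes the failure invisible to them once the remap completes.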

RESOURCE:https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html

It is worth noting that in Azure, the availability sets feature automatically picks the underlying hardware that supports the VM. This is done so that the VMs are placed in separate update domains and fault domains to establish availability and fault tolerance. In AWS, you must proactively place your instances in separate availability zones at creation for high availability, and plan your resources to fit an architecture that supports fault tolerance and redundancy.
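The contrast can be illustrated with a toy model. Both functions below are my own simplification: a round-robin spread that captures the intent, not the actual placement logic of either platform. The default counts of 2 fault domains and 5 update domains are assumptions you would confirm for your region, and the zone names are examples.

```python
from typing import Dict, List, Tuple


def place_in_availability_set(
    vm_names: List[str], fault_domains: int = 2, update_domains: int = 5
) -> Dict[str, Tuple[int, int]]:
    """Azure-style: the platform assigns (fault domain, update domain).

    Simplified as round-robin over both domain counts.
    """
    return {
        name: (index % fault_domains, index % update_domains)
        for index, name in enumerate(vm_names)
    }


def spread_across_zones(vm_names: List[str], zones: List[str]) -> Dict[str, str]:
    """AWS-style: you choose the zones and assign each instance yourself."""
    return {name: zones[index % len(zones)] for index, name in enumerate(vm_names)}


if __name__ == "__main__":
    vms = ["web-0", "web-1", "web-2"]
    print(place_in_availability_set(vms))
    print(spread_across_zones(vms, ["us-east-1a", "us-east-1b"]))
```

In the first function the caller supplies only the VM names, while in the second the caller has to bring the list of zones. That is the practical difference in who does the planning.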

Final Thoughts

The bitter truth about availability, fault tolerance, and redundancy is that the right design depends on the application or services you are deploying into the cloud.

If the application or services you plan to run are stateful, a maintenance shutdown would have a highly negative impact on your service. The only ways to limit it are a planned carry-over, or using the self-service window in Azure or scheduled events in AWS to control the impact.

If they are stateless applications or services, availability sets or availability zones with multiple VMs backed by a load balancer can easily mitigate the impact.

Without an option such as “maintenance mode” in VMware, where a guest OS can be migrated temporarily while host maintenance is done, the possibility of unplanned downtime is a reality in the cloud, as it is in any data center. It’s up to you to architect for it and have a plan to handle such a scenario in the cloud, just as you would in your own data center.

I am partial to Azure. I find management and the big-picture approach to managing resources in Azure much simpler. It was also my first plunge into a cloud computing platform and remains the better of the two services for me. However, both cloud providers offer extremely functional, outstanding sets of features and tools for cloud computing. While AWS holds the largest share of the market and Microsoft has the highest growth rate, as consumers we can be happy that healthy competition has kept innovation at both companies at the cutting edge, to the benefit of consumers.